What is algorithmic bias made of?

Algorithms and code written by humans can get things wrong as often as we do, or more often. But how do they work?

By Sasha Muñoz Vergara. Published July 19, 2021

Facial recognition at airports, which political ads you see, how your job applications are vetted, whether you are diagnosed with Covid-19, and even the predicted risk of fire in your home: technology is involved in all of these decisions. Humans are prone to mistakes, but that doesn’t mean algorithms are necessarily better.

What is algorithmic bias?

An algorithm is a defined, ordered set of instructions for solving a problem or performing a task on a computer. Much of the knowledge we share is transmitted as a sequence of steps to follow. But these systems can be biased depending on who builds them, how they are developed, and how they are ultimately used. That is known as algorithmic bias.

It’s hard to figure out exactly how a system becomes susceptible to that kind of bias. According to a Vox article on the subject, “this technology often operates in a corporate black box, meaning we don’t know how a particular AI or algorithm was designed, what data helped build it, or how it works.”

Algorithms are trained with data

Machine learning, a type of artificial intelligence, is a form of “training.” It involves exposing a computer to a lot of data so that it learns to make judgments, or predictions, about the information it processes based on the patterns it observes.

For example, let’s say you want to train a computer system to recognize whether an object is a book based on some factors, such as its texture, weight, and dimensions. A human could do it, but a computer could do it more quickly.

“To train the system, you show the computer the metrics attributed to a bunch of different objects. You give the computer system the metrics for each object and tell it when the objects are books and when they are not. Then, after continuous testing and refinement, the system is supposed to learn what indicates a book and, hopefully, be able to predict in the future whether an object is a book, based on those metrics, without human help,” says this video from Google explaining how biases work in machine learning.

We are all biased toward one way of thinking. Imagine trying to teach only your way of thinking to a computer. The machine would be missing a vast amount of the data it needs to give the answer you expect or that someone else requires. The fix seems relatively straightforward: include a wide and varied range of items in the first batch of data. But it is more complex than that.
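The book example can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the measurements are invented, and the "learning" is a very simple method (remember the average of each class, then pick the closest average), not the approach of any real product. The function names `centroid`, `train`, and `predict` are hypothetical. The point is what happens when the training batch contains only paperbacks.

```python
from math import dist
from statistics import mean

# Hypothetical measurements: (texture roughness 0-1, weight in kg, height in cm).
# All values are invented for illustration.
BOOKS = [(0.20, 0.30, 18.0), (0.25, 0.40, 20.0), (0.30, 0.50, 21.0)]  # paperbacks only
NOT_BOOKS = [(0.80, 2.50, 40.0), (0.90, 3.00, 45.0), (0.70, 2.00, 38.0)]  # e.g. boxes


def centroid(points):
    """Average each feature across the examples of one class."""
    return tuple(mean(column) for column in zip(*points))


def train(books, not_books):
    """'Training' here just means remembering the average of each class."""
    return {"book": centroid(books), "not book": centroid(not_books)}


def predict(model, features):
    """Pick the class whose average is closest to the new object."""
    return min(model, key=lambda label: dist(model[label], features))


model = train(BOOKS, NOT_BOOKS)
print(predict(model, (0.22, 0.35, 19.0)))  # a paperback-like object -> book
print(predict(model, (0.30, 2.80, 42.0)))  # a large, heavy atlas -> not book
```

The atlas is a book, but nothing like it appeared in the training data, so the system rejects it. The code is not "wrong"; the data it learned from was too narrow.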

What is the impact of algorithmic biases?

These systems are often applied to situations that have far more severe consequences than identifying books, and to scenarios where there is not necessarily an “objective” answer. Usually, the batch of data fed into the algorithms is not complete enough, or selected carefully enough, to avoid discriminatory bias.

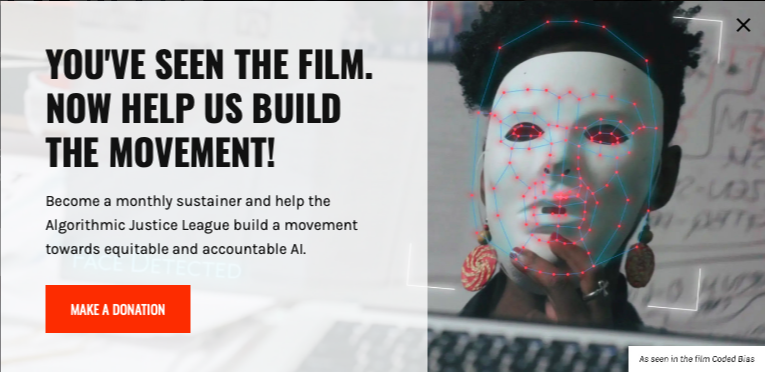

An example of this was exposed in the study Gender Shades: Intersectional Accuracy Disparities in Commercial Gender Classification, also discussed in Coded Bias, a documentary directed by Shalini Kantayya. Building on other studies, it concluded that many of the facial recognition tools used today rely on algorithms trained with biased and discriminatory data, mainly with respect to gender and race.
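One way to see why a single accuracy figure can hide this kind of problem is to break the results down by group. The sketch below is not the method or the data of the study; the records, the group names, and the `accuracy_by_group` helper are all hypothetical and exist only to show the idea.

```python
from collections import defaultdict

# Hypothetical evaluation records: (group, true label, predicted label).
# The values are invented and do not come from any real system or study.
RECORDS = [
    ("group_a", "yes", "yes"), ("group_a", "no", "no"),
    ("group_a", "yes", "yes"), ("group_a", "no", "no"),
    ("group_b", "yes", "no"), ("group_b", "no", "no"),
    ("group_b", "yes", "no"), ("group_b", "yes", "yes"),
]


def accuracy_by_group(records):
    """Return the share of correct predictions for each group."""
    totals = defaultdict(int)
    correct = defaultdict(int)
    for group, truth, predicted in records:
        totals[group] += 1
        correct[group] += int(truth == predicted)
    return {group: correct[group] / totals[group] for group in totals}


overall = sum(truth == predicted for _, truth, predicted in RECORDS) / len(RECORDS)
per_group = accuracy_by_group(RECORDS)

print(f"overall: {overall:.2f}")  # 0.75 looks acceptable on its own
for group, accuracy in per_group.items():
    print(f"{group}: {accuracy:.2f}")  # group_a: 1.00, group_b: 0.50
```

In this toy data the overall score of 0.75 hides the fact that the system is right every time for one group and only half the time for the other.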

Another study found that a computer program that learned the English language automatically picked up discriminatory biases against Black people. What facial features was this program picking up for analysis? Is it the physical features of certain ethnic groups that are discriminated against in the judicial system? Algorithms are powerful, but the data you feed them is more powerful still, because it is what teaches them bias.

How can we fight algorithmic bias?

MIT graduate student Joy Buolamwini was working with facial analysis software when she noticed a problem: the software did not detect her face, because the people who coded the algorithm had not taught it to identify a wide range of skin tones and facial structures. That led her to found the Algorithmic Justice League, whose goal is to raise awareness of the social implications of artificial intelligence through art and research.

There is hope. Buolamwini points out that we are not limited to just one database to train computers. Instead, we can create as many data sets as possible, which “more comprehensively reflect a portrait of humanity,” as she mentioned in one of her TED Talks.

Another way to make algorithms more accurate would be to regulate them by law, but the current regulatory framework for technology is mainly reactive. Consider the Federal Trade Commission’s (FTC) $5 billion fine against Facebook for data privacy violations. The settlement contains virtually no technical enforcement mechanisms, only human and organizational ones.

According to this article on ethical algorithms, an alternative approach is to allow technology regulators to be proactive in their enforcement and investigations. Thus, if a model has a gender bias, for example, regulators could discover it in a controlled, confidential, automated experiment with black-box access to the model before it goes to market.
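The article does not spell out what such an experiment would look like, so the following is only one possible sketch. It assumes the regulator can call the model but not look inside it, and it checks whether a decision changes when nothing but the gender field is changed. The model `opaque_model`, its planted bias, and the applicant fields are all hypothetical.

```python
def opaque_model(applicant):
    """Stand-in for a vendor's model; the auditor can call it but not read it."""
    score = applicant["years_experience"] * 10
    if applicant["gender"] == "female":
        score -= 15  # hidden bias, planted here purely for illustration
    return score >= 50  # True means the application is accepted


def flip_gender(applicant):
    """Copy the applicant and change only the gender field."""
    flipped = dict(applicant)
    flipped["gender"] = "male" if applicant["gender"] == "female" else "female"
    return flipped


def audit(model, applicants):
    """Return applicants whose decision changes when only gender changes."""
    return [a for a in applicants if model(a) != model(flip_gender(a))]


# Hypothetical test applicants prepared by the auditor.
APPLICANTS = [
    {"gender": "female", "years_experience": 3},
    {"gender": "female", "years_experience": 6},
    {"gender": "male", "years_experience": 8},
    {"gender": "male", "years_experience": 5},
]

flagged = audit(opaque_model, APPLICANTS)
print(f"{len(flagged)} of {len(APPLICANTS)} decisions depend on gender")  # 2 of 4
```

The auditor never reads the model's code. Two of the four decisions flip when only gender changes, which is enough to flag the model for a closer look.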